The server that hosts Common Folk Collective also hosts a handful of other sites. As a responsible hosting provider, I try to ensure that if anything were to happen to the server, all is not lost and I'd be able to get everything up and running again pretty quickly.

One thing I do is take nightly backups of the database server and all of the files. This is a guide to help you do the same.

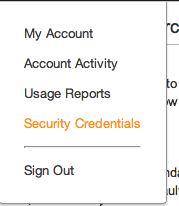

Log in to your AWS console. In the upper right-hand corner, click “Security Credentials”.

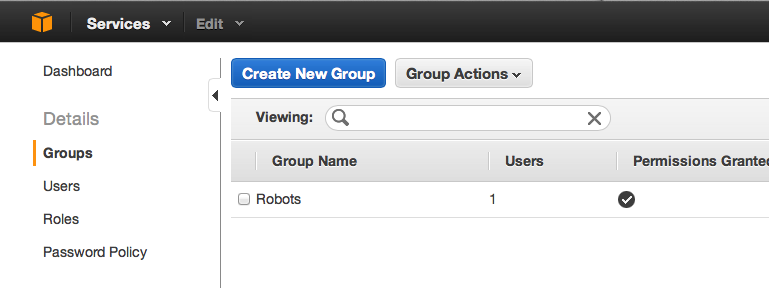

On the left side, click “Groups”.

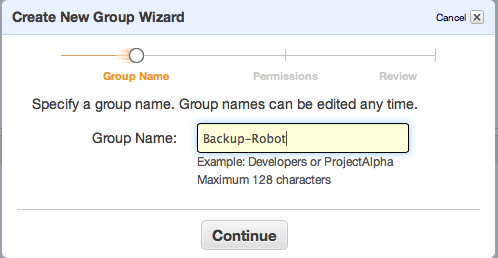

Select “Create a New Group”.

Give it a memorable name.

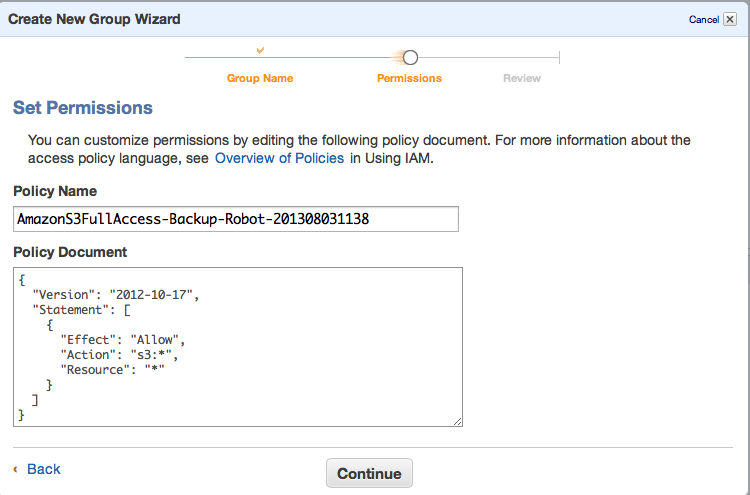

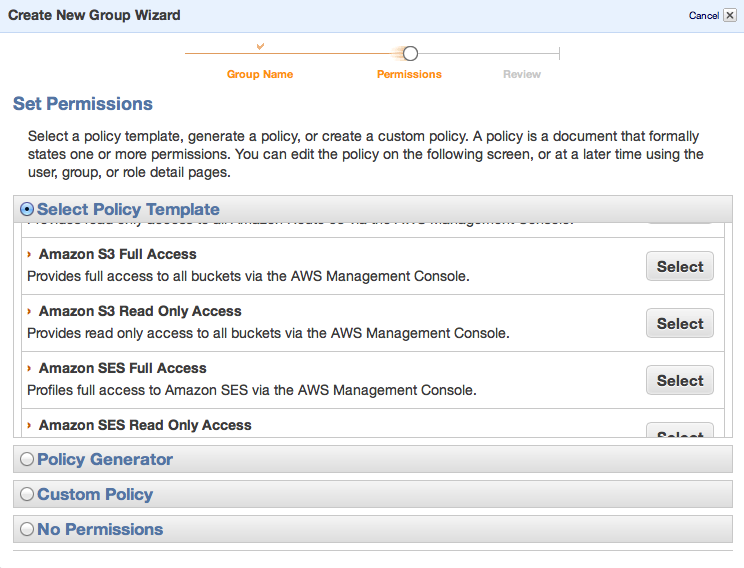

Set the group's permissions to “Amazon S3 Full Access”.

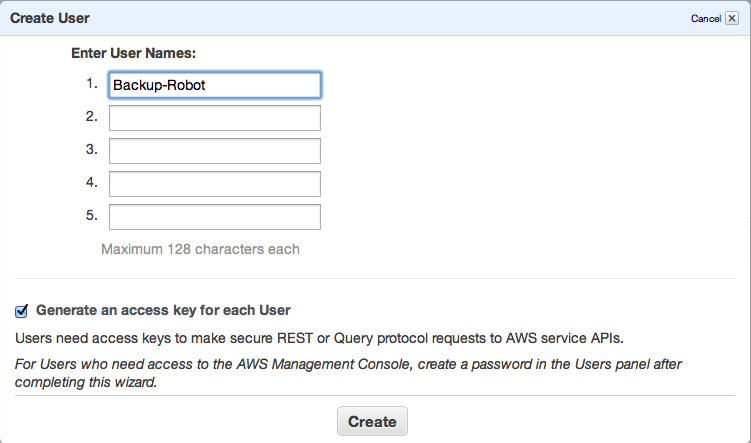

Next, create a new user.

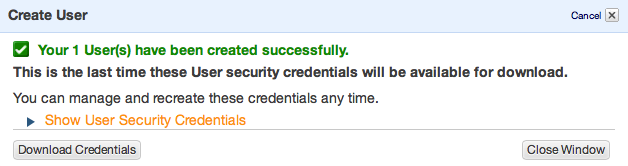

Download the credentials for the new user.

The contents of the file will look something like this:


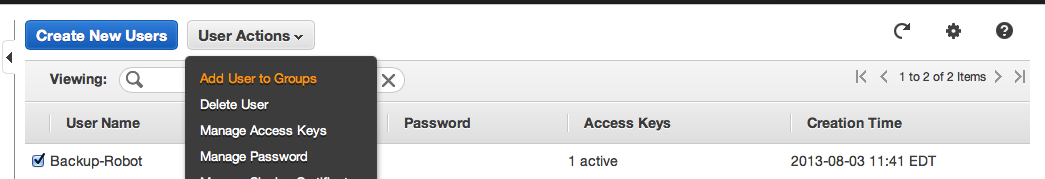

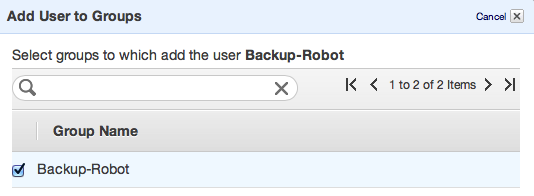

Next, add this user to the group you just created.

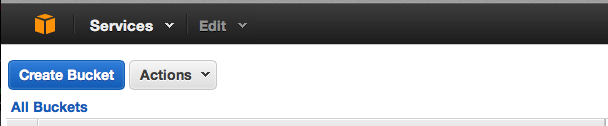

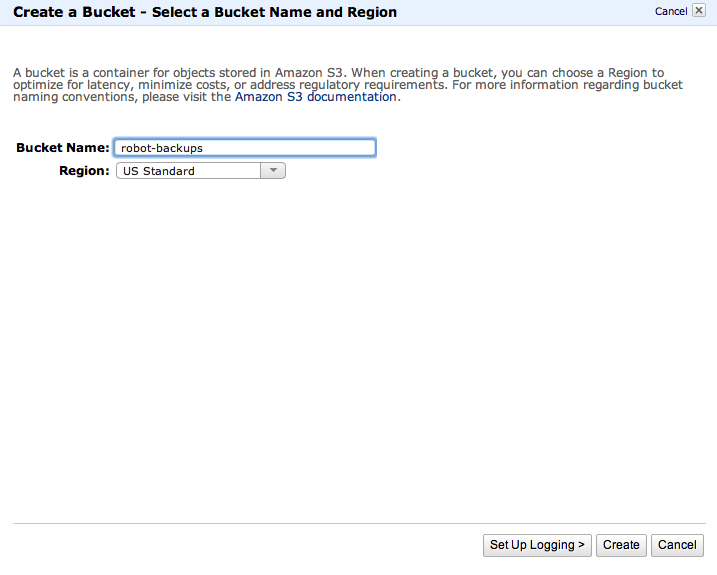

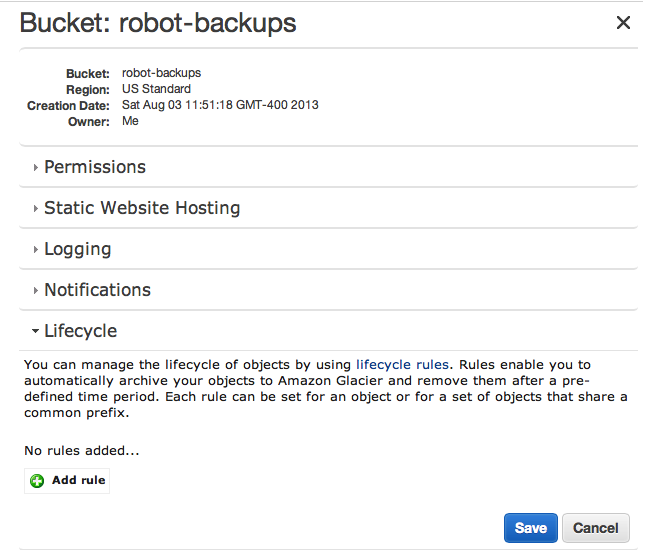
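If you prefer the command line, the console steps above can be sketched with the AWS CLI. This is a minimal sketch that assumes the `aws` CLI is installed and configured with an administrator's credentials. The group name `backup-robots` and the user name `robot` are hypothetical, so pick your own. By default it only prints the commands (a dry run); set `DRY_RUN=0` to actually run them.

```shell
#!/bin/bash
# Sketch only: GROUP_NAME and USER_NAME are hypothetical names, pick your own.
set -euo pipefail

GROUP_NAME="backup-robots"
USER_NAME="robot"
DRY_RUN="${DRY_RUN:-1}"  # 1 = print the commands, 0 = actually run them

# Print a command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Create the group and attach the AWS-managed Amazon S3 full-access policy.
run aws iam create-group --group-name "$GROUP_NAME"
run aws iam attach-group-policy --group-name "$GROUP_NAME" \
  --policy-arn arn:aws:iam::aws:policy/AmazonS3FullAccess

# Create the user, add it to the group, and issue its access key pair.
run aws iam create-user --user-name "$USER_NAME"
run aws iam add-user-to-group --group-name "$GROUP_NAME" --user-name "$USER_NAME"
run aws iam create-access-key --user-name "$USER_NAME"
```

In a real run, `create-access-key` prints the Access Key ID and Secret Access Key. This is the only time the secret key is shown, so save it just as you would the downloaded credentials file.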

Next, create an S3 bucket to hold the backups. Mine is named `robot-backups`, which is the bucket the script below uploads to.

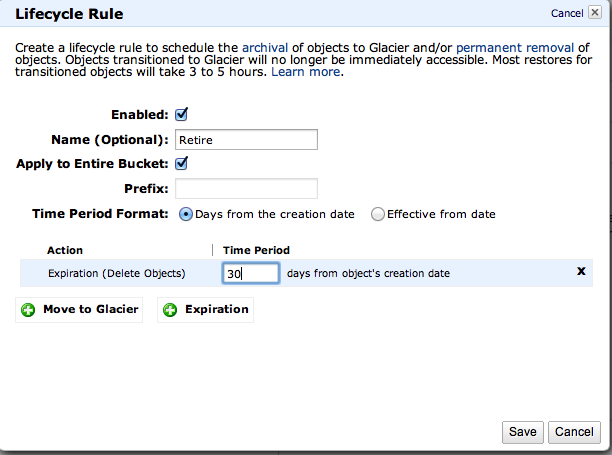
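From the command line, creating the bucket is a single AWS CLI call. This minimal sketch assumes the `aws` CLI is installed and configured. It reuses the `robot-backups` name from the script below, but bucket names are global across all of S3, so you will most likely need a different one. As before, it only prints the command unless `DRY_RUN=0`.

```shell
#!/bin/bash
# Sketch only: assumes the AWS CLI is installed and configured.
set -euo pipefail

BUCKET="robot-backups"   # must be globally unique; change it to your own name
DRY_RUN="${DRY_RUN:-1}"  # 1 = print the command, 0 = actually run it

# Print a command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# "mb" means "make bucket"; it is created in your CLI's default region.
run aws s3 mb "s3://$BUCKET"
```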

Next, edit the lifecycle rules for the backup bucket.

One of the cool features of S3 is that you can expire old backups automatically. This is handy because Amazon charges for every gigabyte you store each month. I keep my backups for 30 days, after which they are deleted. If you want to keep them longer, you can instead move them to Amazon Glacier, which charges much less for storage, with the caveat that retrieving them takes a few hours.
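The same 30-day rule can be written as a lifecycle configuration and applied with the AWS CLI. This is a minimal sketch that assumes the `aws` CLI is installed and configured and that the bucket is `robot-backups`. The rule ID `expire-backups-after-30-days` is a hypothetical label. The script writes `lifecycle.json` to the current directory and, unless `DRY_RUN=0`, only prints the upload command.

```shell
#!/bin/bash
# Sketch only: assumes the AWS CLI is installed and the bucket is robot-backups.
set -euo pipefail

BUCKET="robot-backups"
DRY_RUN="${DRY_RUN:-1}"  # 1 = print the command, 0 = actually run it

# One rule covering every object (empty prefix) that deletes each object
# 30 days after it was uploaded.
cat > lifecycle.json <<'EOF'
{
  "Rules": [
    {
      "ID": "expire-backups-after-30-days",
      "Filter": { "Prefix": "" },
      "Status": "Enabled",
      "Expiration": { "Days": 30 }
    }
  ]
}
EOF

# Print a command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

run aws s3api put-bucket-lifecycle-configuration --bucket "$BUCKET" \
  --lifecycle-configuration file://lifecycle.json
```

To archive to Glacier instead, add a `"Transitions": [ { "Days": 30, "StorageClass": "GLACIER" } ]` entry to the rule, then either move the `Expiration` further out or drop it.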

Now you have everything in place to start backing up. Your first step is to grab a great set of tools to put files on S3 using only bash.

I put these files in `/home/ec2-user/bin/`. I also added the following script there, saved as `backup.sh`:

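```shell
#!/bin/bash

# Timestamp for the backup file names (year-month-day-hour-minute)
NOWDATE=$(date +%Y-%m-%d-%H-%M)

cd /home/ec2-user/bin/

# Dump all databases, compress the dump, upload it to the robot-backups bucket,
# then remove the local copy.
/usr/bin/mysqldump -u root -pDB_PASSWORD --all-databases > "all_db-$NOWDATE.sql"
/bin/gzip "all_db-$NOWDATE.sql"
/home/ec2-user/bin/s3-put -k 20_CHARACTER_STRING -s /home/ec2-user/bin/.robot-key -T "all_db-$NOWDATE.sql.gz" "/robot-backups/all_db-$NOWDATE.sql.gz"
/bin/rm "all_db-$NOWDATE.sql.gz"

# Archive the web root, upload the archive, then remove the local copy.
/bin/tar czf "all_sites-$NOWDATE.tgz" /usr/local/www/*
/home/ec2-user/bin/s3-put -k 20_CHARACTER_STRING -s /home/ec2-user/bin/.robot-key -T "all_sites-$NOWDATE.tgz" "/robot-backups/all_sites-$NOWDATE.tgz"
/bin/rm "all_sites-$NOWDATE.tgz"
```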

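Replace `20_CHARACTER_STRING` with the first string in the credentials you downloaded earlier (the 20-character Access Key ID), and replace `DB_PASSWORD` with your MySQL root password. Put the second, 40-character string (the Secret Access Key) into a file named `.robot-key` in `/home/ec2-user/bin/`.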

Don’t forget to run `chmod 700` on your backup script, since it contains your database password.
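Here is a minimal sketch of creating `.robot-key` and locking things down. It uses `printf` rather than `echo` so that no trailing newline ends up in the key file. This assumes `s3-put` reads the key file byte for byte, so check your copy of the tools. The helper `write_key_file` and the placeholder `YOUR_40_CHARACTER_SECRET` are hypothetical.

```shell
#!/bin/bash
set -euo pipefail

# Write SECRET to FILE with no trailing newline, readable only by its owner.
# Usage: write_key_file FILE SECRET   (hypothetical helper)
write_key_file() {
  local file="$1" secret="$2"
  # Create the file with owner-only permissions before the secret goes in.
  ( umask 077 && : > "$file" )
  chmod 600 "$file"
  printf '%s' "$secret" > "$file"
}

# Example (YOUR_40_CHARACTER_SECRET is a placeholder):
# write_key_file /home/ec2-user/bin/.robot-key 'YOUR_40_CHARACTER_SECRET'
# chmod 700 /home/ec2-user/bin/backup.sh
# wc -c < /home/ec2-user/bin/.robot-key   # should print 40
```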

Run it once by hand to test it. If it's working, you'll see both files show up in your S3 bucket.
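One weakness of the script is that `-pDB_PASSWORD` puts the password on the `mysqldump` command line, where it can be briefly visible to other users in the process list. MySQL client programs, `mysqldump` included, also read credentials from a `[client]` section in `~/.my.cnf`. Here is a minimal sketch of writing that file with owner-only permissions. `write_mysql_client_config` is a hypothetical helper. Once the file exists for the user that runs the backup, you can drop `-u root -pDB_PASSWORD` from the script.

```shell
#!/bin/bash
set -euo pipefail

# Write a MySQL client option file readable only by its owner.
# Usage: write_mysql_client_config FILE USER PASSWORD   (hypothetical helper)
write_mysql_client_config() {
  local file="$1" user="$2" password="$3"
  # Create the file with owner-only permissions before any secret goes in.
  ( umask 077 && : > "$file" )
  chmod 600 "$file"
  printf '[client]\nuser=%s\npassword=%s\n' "$user" "$password" > "$file"
}

# Example: ~/.my.cnf is read automatically by mysqldump for the current user.
# write_mysql_client_config "$HOME/.my.cnf" root 'DB_PASSWORD'
```

If your password contains a `#`, wrap the value in quotes inside the file so MySQL does not read the rest as a comment.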

Now add a cron job to run it nightly. Run `crontab -e` and add the following line:

```
@daily /home/ec2-user/bin/backup.sh > /dev/null
```

This should run every night at midnight, in the server's time zone.
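If you'd rather not edit the crontab by hand, here is a minimal sketch that adds the line only if it isn't already there, so running it twice won't schedule two backups. `add_cron_line` is a hypothetical helper that reads the current crontab on standard input and prints the updated one. In the common Vixie cron and cronie implementations, `@daily` is shorthand for `0 0 * * *`.

```shell
#!/bin/bash
set -euo pipefail

# Print the crontab read from stdin, appending LINE only if it is not already present.
# Usage: crontab -l | add_cron_line LINE | crontab -   (hypothetical helper)
add_cron_line() {
  local line="$1" current
  current=$(cat)
  if [ -n "$current" ]; then
    printf '%s\n' "$current"
  fi
  # -x: whole-line match, -F: fixed string (no regex), --: LINE is not an option
  if ! grep -qxF -- "$line" <<<"$current"; then
    printf '%s\n' "$line"
  fi
}

# Example: install the nightly job for the current user. "crontab -l" fails
# when no crontab exists yet, hence the "|| true".
# (crontab -l 2>/dev/null || true) | add_cron_line '@daily /home/ec2-user/bin/backup.sh > /dev/null' | crontab -
```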

If I’ve missed anything please let me know in the comments.